10-7 ROC曲线

代码

回顾前面学过的代码

def TN(y_true, y_predict):

assert len(y_true) == len(y_predict)

return np.sum((y_true == 0) & (y_predict==0)) # 注意这里是一个‘&’

def FP(y_true, y_predict):

assert len(y_true) == len(y_predict)

return np.sum((y_true == 0) & (y_predict==1))

def FN(y_true, y_predict):

assert len(y_true) == len(y_predict)

return np.sum((y_true == 1) & (y_predict==0))

def TP(y_true, y_predict):

assert len(y_true) == len(y_predict)

return np.sum((y_true == 1) & (y_predict==1))

def confusion_matrix(y_true, y_predict):

return np.array([

[TN(y_true, y_predict), FP(y_true, y_predict)],

[FN(y_true, y_predict), TP(y_true, y_predict)]

])

def precision_score(y_true, y_predict):

tp = TP(y_true, y_predict)

fp = FP(y_true, y_predict)

try:

return tp / (tp + fp)

except: # 处理分母为0的情况

return 0.0

def recall_score(y_true, y_predict):

tp = TP(y_true, y_predict)

fn = FN(y_true, y_predict)

try:

return tp / (tp + fn)

except:

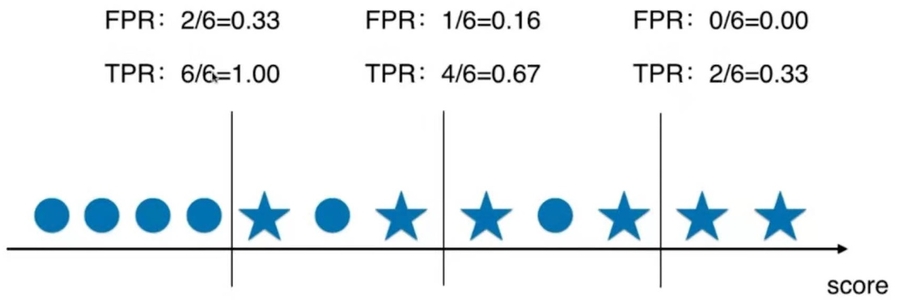

return 0.0TPR和FPR

加载测试数据

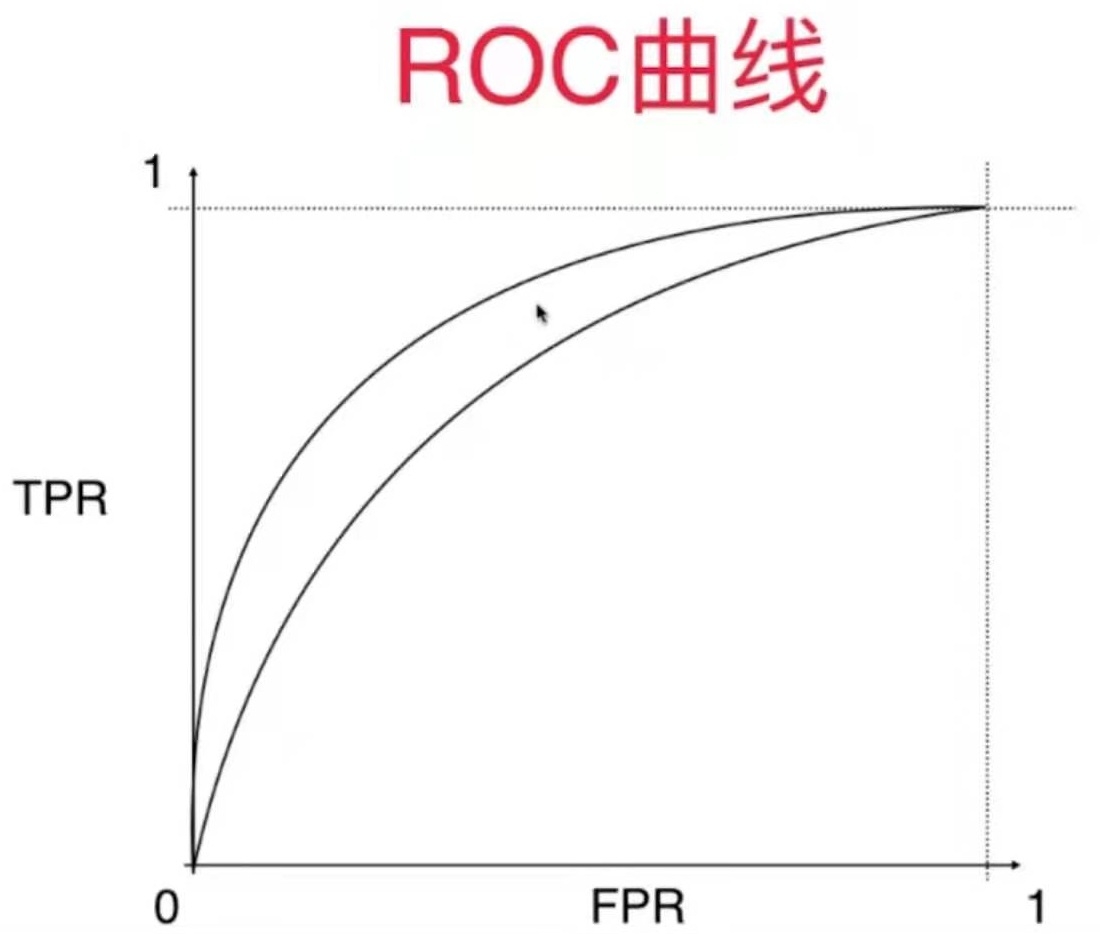

绘制TFP和FRP的曲线,即ROC

sklearn中的ROC曲线

ROC score

总结

Last updated